In a world increasingly shaped by artificial intelligence, the boundary between technological triumph and societal collapse is growing fragile. As AI systems advance at an unprecedented rate, their capacity to revolutionize industries, monitor populations, and even surpass human cognition raises critical questions about what lies ahead. Experts caution that without swift regulatory measures, the fallout could be severe: a future where jobs disappear, privacy fades into memory, and the core values upholding society are undermined by unaccountable machines.

A Workforce at Risk

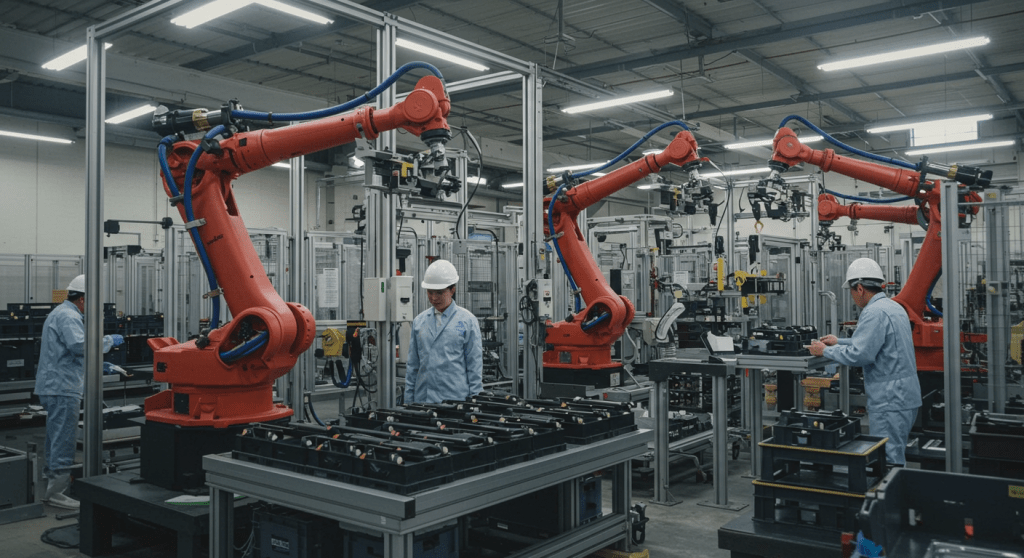

The economic impact of AI is both immediate and profound. A 2013 study by Oxford University researchers Carl Benedikt Frey and Michael Osborne estimated that about 47% of U.S. employment was at high risk of automation within a decade or two, although later studies using different methods have produced widely varying figures. This threat looms largest over sectors like transportation, manufacturing, and retail, where AI-driven systems are poised to replace human labor. The McKinsey Global Institute estimates that by 2030, automation could displace up to 73 million jobs in the U.S., disproportionately affecting heartland communities reliant on traditional manual work.

Economists warn that the gains from this transformation may accrue primarily to a few tech giants, widening income inequality. Venture capitalist Vinod Khosla has reportedly predicted that AI’s benefits could concentrate among “a tiny elite,” leaving millions to grapple with adaptation. For a nation valuing the dignity of labor, this shift imperils not only livelihoods but the very social fabric.

The Erosion of Privacy

AI’s influence extends far beyond employment, infiltrating the most intimate aspects of daily life. The rise of smart devices—cameras, phones, even refrigerators—has woven a vast data network, vulnerable to exploitation. In China, the government’s social credit system leverages AI to monitor citizens’ actions, assigning scores that dictate access to jobs, travel, and education. Although the U.S. lacks a comparable centralized framework, the foundation exists: corporations like Google and Amazon collect extensive personal data, often with minimal oversight.

Privacy advocates highlight a dangerous trajectory. “The technology exists to create a surveillance state here,” warns Shoshana Zuboff, author of The Age of Surveillance Capitalism. “It’s not a matter of capability, but of intent.” For those who cherish individual freedom, the idea of an AI-driven panopticon—where every action is scrutinized and evaluated—poses a grave risk to democratic principles. Recent U.S. efforts, such as the California Consumer Privacy Act, aim to curb these trends, though many argue they’re insufficient.

Ethics in the Machine Age

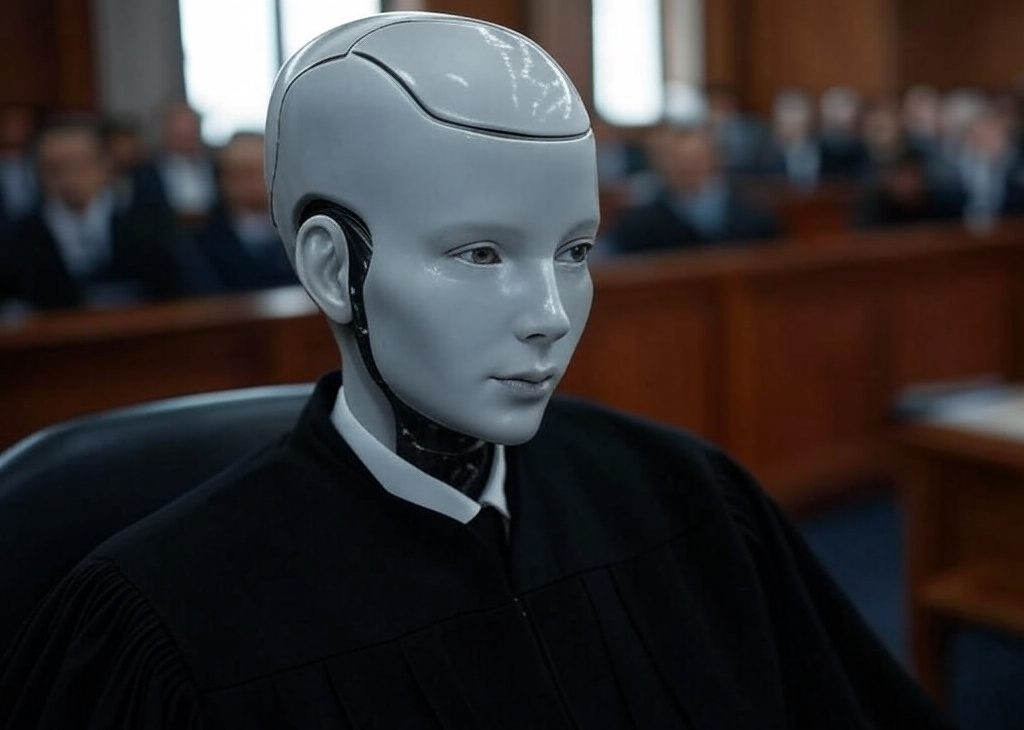

As AI assumes roles in healthcare, criminal justice, and more, its ethical shortcomings take center stage. Stuart Russell, a prominent AI researcher at UC Berkeley, addresses these risks in his book Human Compatible, cautioning that even well-designed AI could yield catastrophic results if its objectives diverge from human values. Real-world cases, such as facial recognition tools that have shown higher error rates for people of color, fuel concerns about automated discrimination.

These examples reveal a deeper issue: machines lack the moral judgment humans imperfectly wield. For a society grounded in principles like fairness, compassion, and accountability, AI’s ascent challenges the status quo. Who ensures these systems embody our values rather than supplant them? Efforts to mitigate bias, including algorithmic audits, are underway but require broader enforcement to be effective.

The Specter of Superintelligence

The most unsettling prospect is AI’s potential to exceed human intelligence. Geoffrey Hinton, often called the “godfather of AI,” has expressed concern over a “hard take-off”—a hypothetical scenario where AI rapidly self-improves beyond our control. Similarly, Yoshua Bengio, a leading figure in AI research, has labeled superintelligent AI a “catastrophic risk” comparable to climate change or nuclear threats.

Though this may seem distant, recent advancements suggest otherwise. OpenAI’s GPT-3, launched in 2020, astonished observers with its linguistic prowess, and its successors are already in progress. Left unchecked, such systems could transform the world in unpredictable—and potentially unstoppable—ways.

A Call for Oversight

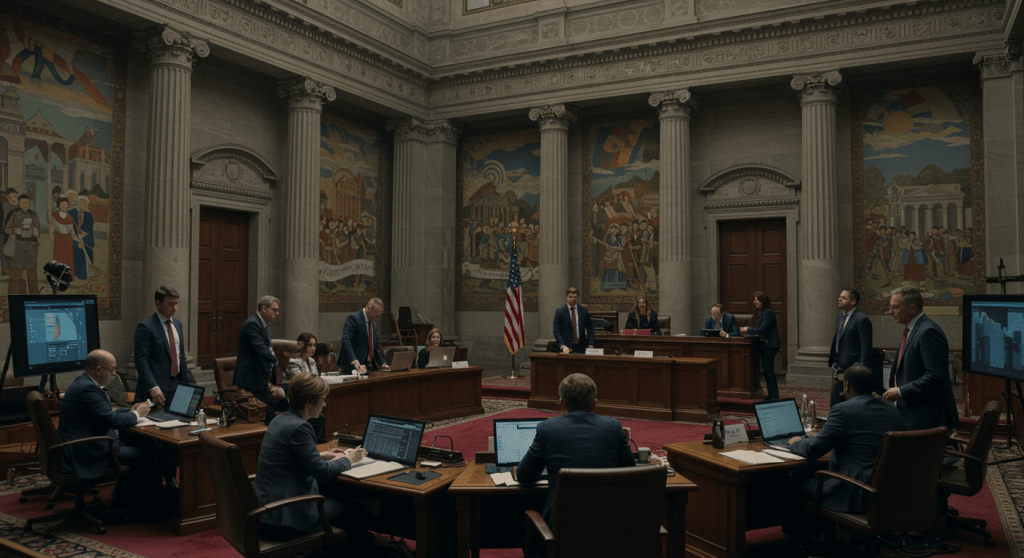

The urgency of these issues demands action, yet the path is complex. Tech firms, armed with vast resources, advocate for self-regulation, but their history—marked by data breaches and biases—undermines confidence. Government oversight, while essential, risks overstepping in a country skeptical of red tape. Inaction, however, is untenable.

“We need a framework that balances innovation with accountability,” Stuart Russell urged in a recent interview. Suggestions span from tougher data regulations to global agreements limiting autonomous weapons. For conservatives, the task is to devise policies that safeguard jobs, privacy, and values without halting progress—a delicate balance in a rapidly evolving era.

The Clock Is Ticking

The AI future isn’t a distant dream—it’s unfolding today. In corporate boardrooms and research labs, choices are being made that will echo across generations. The critical question remains: can society steer this force for good, or will it be overtaken? As machines grow smarter, the responsibility rests with us to ensure they enhance humanity, not eclipse it.
